Introduction and background

This paper describes a small qualitative interview study of practice managers and GPs in English general practices, investigating how feedback posted by patients to the NHS Choices website is used by practices in their quality improvement activity. Here, ‘quality improvement’ refers to the set of separate and integrated systems and activities that practices use to ensure safety and improve the quality, efficiency and effectiveness of the healthcare that they provide. It is closely aligned with the commonly-agreed definition of clinical governance: “the structures, processes and culture needed to ensure that healthcare organisations – and all individuals within them – can assure the quality of the care they provide and are continuously seeking to improve it.” (Department of Health 2011a)

First, the NHS Choices commenting system is described, and other current sources of performance data for general practices are discussed. Next, the concept of patient feedback is put into a public policy context; then the literature relating to theories of impactful feedback, and the practical effect of feedback on doctors’ performance, is critically reviewed.

The research methods used in the study are described; results, with illustrative extracts from transcribed interviews, are reported; and finally the results are discussed with reference to the literature.

System description

NHS Choices is the main website for the NHS in England. It provides information to support self-care by patients and hosts directories of NHS organisations and services. Since October 2009, patients have been able to post comments about their general practice, which (after moderation) are viewable on the practice’s NHS Choices (NHSC) profile. Patients can leave a free-text comment, grade several aspects of the practice and state overall whether they would recommend the practice to a friend.

Comments are reviewed by NHS Choices moderators, who screen them for abusive or offensive language and for information that may identify a specific patient or clinician. Comments which do not meet the site’s commenting policy (NHS Choices 2012) may be edited or rejected entirely.

When a comment is approved and posted to the practice profile, a nominated contact at the practice is alerted by email, and has the opportunity to post a response. Responses are subject to the same moderation policy as patient comments, but the moderators will not intervene to suggest changes to practice responses regarding tone or possible effects on reputation [John Robinson, Head of User-Generated Content, NHS Choices, personal communication]. Practices may also ask for a patient comment to be reviewed again, and if necessary it may be further edited or removed by a moderator.

Patients must supply a valid email address, to which a confirmation message is sent before the comment is accepted; they do not need to supply any other identifying information, and may comment pseudonymously or anonymously. As a result, it is not possible directly to gauge the demographic characteristics of NHS Choices commenters.

Practices may not opt out of the feedback system, although they are not obliged to post responses to patient feedback.

The proportion of commenters in the past twelve months who would recommend the practice is calculated and displayed prominently on the practice’s profile pages, and in the summary listing of practices presented when searching the site by postcode or practice name.
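As a purely illustrative sketch of this calculation, the recommender proportion might be computed as below. The exact method used by NHS Choices is not described here, so the `Comment` record, its field names, the 365-day window and the handling of practices with no recent comments are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Comment:
    """Hypothetical record of one moderated patient comment."""
    posted_on: date
    would_recommend: bool


def recommender_proportion(comments: list[Comment], today: date,
                           window_days: int = 365) -> Optional[float]:
    """Share of commenters within the window who would recommend the practice.

    Returns None when no comments fall inside the window (an assumption:
    the site's actual behaviour in this case is not documented here).
    """
    cutoff = today - timedelta(days=window_days)
    # Keep only comments posted within the last `window_days` days.
    recent = [c for c in comments if cutoff <= c.posted_on <= today]
    if not recent:
        return None
    # True counts as 1 and False as 0, so the sum is the number recommending.
    return sum(c.would_recommend for c in recent) / len(recent)
```

A practice with very few recent comments will show a highly volatile proportion under any calculation of this kind, which is relevant to the concerns about self-selecting commenters discussed later.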

The GP-rating function of the NHS Choices site follows the model of a similar system for feedback to NHS hospital trusts; there are now at least several dozen other websites allowing patients to rate individual doctors, practices and hospitals in the UK, US and elsewhere in Europe.

Initial analysis of the comments posted about general practices reveals wide inter-practice variation in use of the site (with the most-commented practice receiving more comments than the next three practices combined) and in patient ratings (from 100% stating they would recommend to a friend to 0%). (Robinson, J. 2011) Practices’ responses to patient comments also vary widely in content and tone, suggesting that there is at least ambivalence or confusion about the value of NHSC patient feedback.

Other sources of performance data for general practice

Because of the relatively long history of computerisation of UK general practice; as a side-effect of the introduction of a performance-related element to practice income; and because primary care accounts for a large proportion of NHS costs (and hence is a target for cost savings and efficiency measures), English GPs are able to draw on a wide range of sources of measurement and feedback on individual and practice-level performance.

Quality and Outcomes Framework

Introduced in 2004, the Quality and Outcomes Framework (QOF) is a set of indicators covering clinical and organisational domains which aims to measure and reward practices’ use of systems and processes which improve quality of care, and clinical evidence of improved health and reduced risk of disease. (British Medical Association and NHS Employers 2012) Practices score points according to their achievement against the indicators; the yearly points score is converted to a cash payment, proportional to the size of the practice. In 2011-2012, QOF payments made up around 25% of average practice income (Robinson, S. 2012), incentivising high levels of compliance. Individual practices’ QOF achievements are published and are summarised on the practice NHS Choices profile.

The QOF also incentivises the recording and review of “significant events” - such as new cancer diagnoses, medication errors, or near misses where patients may have been subjected to harm - as part of practices’ education and training activity.

GP Patient Survey

Currently administered by Ipsos MORI on behalf of the Department of Health, this survey is sent directly to around 1.36 million people every six months, and measures aspects of patient experience including access (ease of making appointments, contacting the practice by telephone, etc.) and consultations with doctors and nurses. The results are published at practice level, and are summarised on each practice’s NHS Choices profile.

Local Surveys and Patient Participation Directed Enhanced Service

Practices may also commission their own internal surveys, and are currently rewarded for doing so, and for setting up a patient reference group (PRG), representative of the practice population, which is intended to feed back individual views on the practice’s services, and to reach agreement with the practice on plans for quality improvement. (NHS Employers)

Complaints procedure

In common with other NHS bodies, general practices must follow specific procedures when receiving and responding to patient complaints (specified in The Local Authority Social Services and National Health Service Complaints (England) Regulations 2009). These procedures specify time limits for acknowledging and responding to complaints; recording complaints and outcomes; and escalation of complaints where local resolution is not possible. The QOF incentivises practices to review patient complaints at least yearly and to share “general learning points” with the practice team.

Audit

In addition to the nationally- and locally-driven measures of quality discussed above, doctors are also encouraged themselves to design and carry out audits of their own clinical practice. (GMC 2009 para. 14c)

Appraisal and revalidation

Since 2002 UK doctors have been required to participate in a yearly structured appraisal of all aspects of their professional practice. Until 2012 this was a largely formative exercise, focussed on personal development and training needs, and making plans for improvement and action in the coming year. This generally included discussion of quality-improvement activity such as audit, complaints and significant event analysis. From 2013, to support the new system of revalidation, appraisal processes will be “strengthened” and will require doctors to collate in a more systematic way evidence of participation in clinical audit, and (an innovation for many doctors) the commissioning of a multi-source feedback (MSF) exercise wherein clinical and non-clinical colleagues are asked to give structured evaluations of a doctor’s performance, knowledge and skills. Doctors will also be required to commission an individual survey of their patients’ satisfaction. (GMC 2012)

It will be interesting to consider whether and how NHS Choices feedback adds to the varied and extensive amount of performance and quality data already available to English general practices. The generalisability of this study’s conclusions will also need to be assessed with this in mind; online patient feedback in health systems with less routinely-available quality data may be proportionately more important as a source of information on performance.

Literature review

Search strategy

Initial keyword searches were run in several generalist bibliographic databases, including PubMed, Scopus, JSTOR, Zetoc and Google Scholar. Keywords used included “NHS Choices” and “Trip Advisor” to identify studies dealing specifically with the issue at hand; and Boolean searches combining more general terms, such as “Quality Improvement AND (Primary Care OR General Practice)” and “Feedback AND Quality Improvement”. Database searches were also run using controlled terminologies (such as MeSH subject headings in PubMed) but these did not yield any additional relevant citations.

The bibliographies of retrieved articles were hand-searched for further relevant publications; online citation analysis tools were used to find newer publications which had cited the initially-retrieved articles.

Further articles were identified in discussion with tutors and peers.

Social and governmental policy context

The introduction of a system which allows patients to comment on and grade the quality of their medical care; which publishes these comments with minimal intermediation; and which aims to stimulate response from the medical care provider can be located within several strands of public policy theory that have been developed in the last three decades.

The ascendancy of market-based ideologies in many Western countries in the 1980s brought about criticism of many of the services hitherto delivered largely by the state, such as health, public housing, and the public utilities. Such services were seen by proponents of the New Public Management (NPM) (Hood 1991) as being inefficient and ineffective, because of such failings as lack of public scrutiny; reliance on monopolies and coerciveness; complex, opaque or poorly-defined objectives; and capture of institutions by self-interested professionals and bureaucrats. In order to reorient such services towards improved outcomes and efficiency, governments introduced policies of “disaggregation, competition, and incentivization” (Dunleavy et al. 2005). In the NPM, the service user is idealised as the main driver for improvement through competition: informed and rationally choosing amongst service providers, the citizen-as-consumer rewards good services and penalises poor ones by bringing payment with them. In this conception, the more information about a service a consumer has, the more discerning will be their choice.

Bejerot & Hasselbladh (2001) identify a potentially fatal problem for the NPM as applied to health care: ever since patient satisfaction surveys began to be widely used in the 1970s, most respondents reported high levels of satisfaction most of the time. Such a placid customer-base would not provide the pressure needed as an impetus for improvement and reform. Hence, they argue, ever-more complex and leading survey questions are used to tease out small areas of concern, resulting in the artificial construction of the ‘dissatisfied patient’ required by ideology to discriminate between good and poor quality services. We might suggest that such a constructed notion is given flesh by the use of star-ratings, league-tables and similar methods which, deliberately or not, might have the effect of stimulating patients’ dissatisfaction with their local services which might not otherwise have been felt.

The NHS Choices commenting system, then, can be placed firmly into this theoretical framework - explicitly associating patient feedback with patient choice, and eliciting areas of dissatisfaction with the aim of thereby driving improvement in services.

The central tenets of NPM have been challenged by, appropriated by, or evolved into, newer prescriptions for public service reform, such as Giddens’ (1991) Third Way; Public Value Theory (Moore 1997) and Digital Era Governance (Dunleavy et al. 2005). However, user-satisfaction remains an important metric: specific to the question at hand, Adams (2011), in conducting a discourse analysis of patient-rating websites (though not NHS Choices), identifies the ‘Reflexive Patient’ who is empowered (or encouraged, or even coerced) to participate in the governance of health providers and systems.

Specifically in England, gathering and publishing patient feedback has been driven by the policy of the present and previous governments to inform and increase patient choice in the NHS (Secretary of State for Health 2006; Coulter 2010), and more broadly is in line with government plans to increase the effectiveness of public services by vastly increasing the amount of data which is published (Cabinet Office 2009, 2012). These plans can be seen to accord with the direction of Western public policy described above.

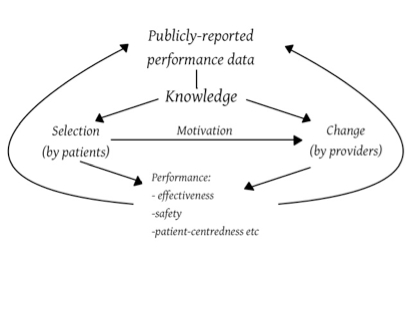

Applying these concepts to healthcare, Berwick et al. (2003) formulated a model of how publishing data about clinician and organisation performance was imagined to improve performance, effectiveness, safety and so forth, by direct effects on providers and through stimulating choice by patients (see figure 1 below). However in reality Berwick et al. found that neither side of the model worked reliably, because of a lack of accessible, reliable and actionable measures of quality (for both patients and providers), and because of the lack of capacity of patients to make ‘rational’ choices (because of lack of information, or emotion, or fear for instance) and of organisations to make meaningful changes (because of cultural, political and resource constraints).

Figure 1 Redrawn from Fung et al. (2008) after Berwick et al. (2003)

Feedback - theoretical context

In the quality improvement literature, the term ‘feedback’ can refer to a wide range of methods used to provide practitioners and organisations with data relating to their own performance. Examples include computer alerts at the point of prescribing; presentation of audit findings (as evaluated in Ivers et al., 2012, for instance); and publication of national performance data benchmarked against similar organisations.

For our purposes, the effects of relatively unstructured feedback from patients about practice performance can be analysed by reference to the specific sense of feedback used by educationalists, and most often applied to situations where learners receive feedback on their performance from peers and teachers: “information provided by an agent (e.g., teacher, peer […]) regarding aspects of one's performance or understanding” (Hattie and Timperley 2007).

The foundational model of educational feedback in medicine was developed by Pendleton et al. (1984). Often referred to as Pendleton’s rules, the model holds that feedback is most effective at improving practice when: the recipient wants and is ready for feedback; the learner, followed by the observer, states what went well; then the learner, again followed by the observer, identifies what could be improved; and a plan for action is agreed.

While Pendleton’s rules have been criticised for being overly-rigid, or at least prone to being too rigidly-applied (for example Walsh 2005), and they have been extended and made more goal-oriented and learner-centred (e.g. Kurtz and Silverman, 1996; Hattie and Timperley, 2007), the basic idea remains current.

Concerns about NHS Choices

There has been heated debate about the possible effects of doctor-rating websites, with professional bodies such as the BMA (2009) and doctor-commentators (e.g. McCartney 2009) raising concerns about the risk of a small number of self-selecting patients having a disproportionate effect on doctors’ behaviour through their predominantly negative online comments. McCartney points out that some negative feedback from patients may stem from good practice, such as refusing to prescribe unnecessary medicines, and worries that patient comments may falsely reassure ‘bad’ doctors, or cause ‘good’ doctors adversely to alter their practice.

After the NHS Choices GP comment system went live, reports in the medical and lay press focussed on GPs’ concerns about accuracy and fairness, with GPs quoted describing the system as “demoralising, destructive” (Anon. 2011b) and alleging that comments were often “malicious” or “fictitious” (Leach 2012). Practice staff frustrations with anonymity and the perceived hostile tone of comments were highlighted in reports of the sacking of a practice manager whose responses to patients were considered to be unacceptably rude (Anon. 2011a). Subsequently the BMA published brief guidance for practices on how to respond to NHS Choices feedback, with an emphasis on being constructive and avoiding defensiveness. (Barr 2011)

How well can patients rate their own care?

In investigating whether online patient feedback can be used in quality improvement, we need to consider how well-correlated patient satisfaction is with the quality of their care. The current research evidence is somewhat discouraging: Howell et al. (2007) found that satisfaction was correlated with some measures of the good organisation of stroke care, and negatively correlated with others, while Chang et al. (2006) found no correlation with the technical aspects of the care of vulnerable older patients.

More recently, and important for our purposes, the NHS Choices recommender score (the proportion of commenting patients who would recommend a hospital to a friend or relative) has been found to be correlated with some whole-hospital quality measures including standardised mortality rate (SMR) and the rate of emergency readmission within 30 days of discharge. Similarly, patients’ perceptions of the cleanliness of a hospital were negatively correlated with rates of Methicillin-resistant Staphylococcus aureus and Clostridium difficile infection. (Greaves et al. 2012b)

The satisfaction of primary care patients in a deprived area of Glasgow was largely linked to interpersonal attributes, and patients generally assumed that their doctor was competent (Mercer et al. 2007). An undue emphasis on comparing satisfaction to supposedly objective measures of quality of care may, of course, risk discounting subjective aspects of care which are nevertheless very important to patients: we would hope that doctors would not be satisfied with providing technically excellent care while failing to attend to patients’ dignity or need for information, for example.

We need also to be wary of factors which confound patient satisfaction: age, gender, employment and marital status, and long-term limiting illness are all strongly correlated with satisfaction (Venn and Fone 2005). It may well be that patient satisfaction measures need to be taken into account alongside objective measures of quality, rather than being used as proxies for them. Certainly it seems that doctors are poor at estimating their patients’ satisfaction with individual consultations (McKinstry et al. 2006); perhaps not surprisingly if, as identified by Winefield et al. (1995), GP and patient satisfaction are determined by largely disparate criteria.

Broadly, these studies suggest that patient satisfaction is correlated with aspects of healthcare which are both visible to patients and perceived as being salient to their care. For example, stroke patients may have more readily appreciated the value of being assessed by a physiotherapist than of being weighed, and primary care patients value seeing a regular GP and longer consultations. This has implications for the present study, given that quality improvement activities such as audit and significant event analysis (National Patient Safety Agency 2005) are unlikely to be visible to most patients, and may take doctors’ time away from seeing patients.

Effectiveness of giving feedback on performance

Attempts to assess the effect of feedback to doctors from patients suffer from the difficulty of designing studies which can capture the complex nature of patient-feedback interventions (Reinders et al. 2011): studies are often of limited quality, and systematic reviews are limited by the heterogeneity of primary research.

Systematic reviews of the effect of feedback on doctors’ interpersonal (Cheraghi-Sohi and Bower 2008) and communication skills (Reinders et al. 2011) found some evidence that patient feedback could improve skills, but positive studies tended to be non-randomised and/or uncontrolled and qualitative in design, and tended to examine doctors’ knowledge and valuation of such skills (i.e. Kirkpatrick levels 1-2 (Kirkpatrick 1967)) rather than changes in their actual clinical performance. However, Reinders et al. did find that doctors, particularly those who are performing less well, were more likely to respond to feedback from patients than from their better-performing peers.

It seems likely that the impact of patient feedback is increased by organisational factors such as the degree to which leaders and senior clinicians support the use of feedback in QI (Davies and Cleary 2005) and the infrastructure and resource that is made available to support doctors receiving feedback, with interpretation and opportunity for guided reflection increasing GPs’ positive attitudes to patient evaluations, job satisfaction and intention to change practice. (Heje et al. 2011)

One of the few controlled trials to have been conducted in this area (Vingerhoets et al. 2001) found that feedback of patient evaluations did increase GPs’ self-reported intention to improve their practice, but post-feedback patient evaluations did not improve—suggesting that the feedback was not sufficiently action-oriented to drive quality improvement. A related study of this trial’s participants (Wensing et al. 2003) examined Australian GPs’ attitudes to receiving patient feedback—again, one of the few such studies. While most participants were initially keen to receive feedback, those GPs in the intervention group subsequently felt that patient feedback had less practical relevance to their work, and were less likely to see reasons to change anything at all in their practice. Barriers to accepting and using patient feedback included the perceived difficulty of meeting all of their patients’ needs (the implication being that GPs didn’t want to be burdened by learning about new ways in which they were failing to do so); the considerable time and energy required to synthesise and act on feedback; and perceived difficulty in interpreting the significance of survey results.

These findings align with Kluger and DeNisi’s conclusion (1996) from analysing a much wider literature on occupational feedback and performance, that feedback has less effect as the focus of attention moves up Kirkpatrick’s hierarchy “closer to the self and away from the task,” given its increasing personalisation and a consequent increase in subjects’ defensive reactions. Since GPs in Australia as in the UK are closely identified with organisational as well as interpersonal aspects of care, it could be that most of the domains evaluated by patients were felt by the participants to be related to their identity as GPs, and hence criticism might have been taken much more personally as a result.

A key systematic review (Veloski et al. 2006) identified characteristics of feedback which were associated with positive effects on doctors’ behaviour. While specific patient-sourced feedback does not seem to have been considered, the perceived authority of the feedback source was important, with more authoritative sources (such as employers or peer groups) being more influential than research teams, for instance. We can speculate that on this axis, patients would be regarded as being less-authoritative still. Of particular note for our purposes, Veloski et al. found that comparing doctors’ performance to local statistical norms or professional standards had little or no effect on performance; nor did making performance ratings publicly-available. These findings tend to be confirmed by more recent systematic reviews of clinical governance activity (Phillips et al. 2010, concluding that targeted, peer-led feedback improved clinicians’ performance) and publication of performance data (Fung et al. 2008, finding only small and inconsistent effects on patient choice, clinician QI activity and measured improvements in quality).

Specific research on doctor-rating websites

Most of the research which has been carried out on patient rating websites has focussed on usability and the content of patients’ comments.

Lagu et al. (2010) reviewed the user-friendliness of 33 US-based sites (which was generally poor), and analysed the distribution of positive and negative ratings. A large proportion of ratings (88%) were judged as being positive, which contradicts concerns such as those expressed above; however, the average number of doctor-ratings per site was low, thought by the authors to be related to poor user-friendliness, which limits the generalisability of their findings. Strikingly, the authors reported finding several positive comments which appeared to have been written by or on behalf of the doctors themselves, which may further have skewed the distribution of ratings.

Lopez et al. (2010) conducted a thematic analysis of the comments left on two rating websites, the majority of which were again positive. They found that most positive comments were “global” - that is, they discussed the doctor’s positive attributes in general terms - while the negative comments tended to report more information about a specific clinical encounter. This finding has relevance for the present study: if situation-specific feedback is, as seems likely, more impactful and more likely to change a doctor’s behaviour, we need to consider whether negative comments (tending to be more specific) have a greater effect on behaviour change than do positive ones.

Adams (2011), in a similar but more in-depth thematic analysis, finds that patients often make sophisticated and nuanced comments about their healthcare experiences: “Commenters tend not just to opine, but to openly reason out their comments in a personal frame of reference.” (p. 1074) This suggests that online patient feedback may indeed be a potentially rich source of data about patients’ experiences of the quality of their care.

Reimann and Strech (2010) investigated the structured questions posed to patients by doctor-rating sites, and cross-referenced them with a set of validated patient-satisfaction questionnaires to assess whether the sites set out to elicit feedback in similar domains to the questionnaires. Few of the sites studied covered more than half of the identified domains in their structured questions; it seems likely that many designers of such sites do not take into account academic research into patient satisfaction measurement, and indeed it is questionable whether they have any commercial imperative to do so.

The NHS Choices numerical ratings of various aspects of patients’ experience in hospital were found to correlate well with the results of a national, randomly-sampled postal survey of recent inpatients (Greaves et al. 2012a). This suggests that concerns about the self-selected nature of online commenters may be overstated; however, little is known about the characteristics of patients who choose to leave feedback on NHS Choices about hospitals, and it is reasonable to question whether they are similar as a group to those patients rating their general practice experience, hospital admission being a much rarer, more significant, and likely much more fraught experience than visiting a GP.

Comparison with other industries

Online customer feedback is a common feature of many industries, including retail (product reviews on Amazon, for instance) and travel (for example TripAdvisor, which is often cited disapprovingly in relation to NHS Choices), and in most sectors has been used for considerably longer than in healthcare. It would be instructive to compare the processes and outcomes involved with working with online user feedback across industries; however, the literature search conducted for this study found only unreferenced ‘how-to’ guides and other instructional material; essentially no peer-reviewed literature could be identified. This may be because sectors such as marketing and tourism are not as inclined to conduct and publish research as are healthcare organisations, and may not be as well-resourced to do so.

Summary and research question

Spontaneous, published patient feedback could be a rich source of data for practices to use in their quality-improvement activity (analogous to reviewing formal complaints and significant event analysis) but it is not known whether practices do in fact use online feedback in this way. It is also not clear in what ways NHSC feedback might differ in nature from the other sources of patient satisfaction data available to practices. In addition, the literature is equivocal as to the likely impact of such feedback on doctors’ behaviour and performance. There is also little primary research asking how organisations and individual clinicians use such patient feedback; still less which considers the kind of reactive, qualitative feedback which comes from patients’ comments on NHS Choices.

Given these gaps in our knowledge, there is a need for research which describes the way in which GPs and practices engage with their NHSC feedback, and attempts to prise ajar the ‘black box’ containing the processes, transactions and assumptions that are in play. The primary research question for the present study is therefore: “How do General Practices in England use patient feedback posted to the NHS Choices website in their quality improvement activity?”